Spectrum sharing via machine learning

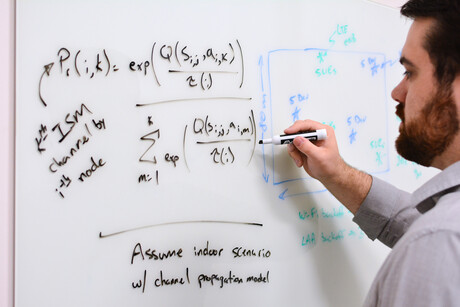

A machine learning formula developed by NIST could help 5G wireless network operators efficiently share communications frequencies.

Researchers at the US National Institute of Standards and Technology (NIST) have developed a mathematical formula that, computer simulations suggest, could help 5G and other wireless networks select and share communications frequencies about 5000 times more efficiently than trial-and-error methods.

The novel formula is a form of machine learning that selects a channel based on prior experience in a specific network environment. Described at a virtual conference in May, the formula could be programmed into software on transmitters in many types of real-world networks.

The NIST formula is a way to help meet growing demand for wireless systems, including 5G, through the sharing of unlicensed frequency ranges, such as those used by Wi-Fi. The NIST study focuses on a scenario in which Wi-Fi competes with cellular systems for specific subchannels. What makes this scenario challenging is that these cellular systems are raising their data-transmission rates by using a method called License Assisted Access (LAA), which combines unlicensed and licensed bands.

“This work explores the use of machine learning in making decisions about which frequency channel to transmit on,” NIST engineer Jason Coder said. “This could potentially make communications in the unlicensed bands much more efficient.”

The NIST formula enables transmitters to rapidly select the best subchannels for successful and simultaneous operation of Wi-Fi and LAA networks in unlicensed bands. The transmitters each learn to maximise the total network data rate without communicating with each other. The scheme rapidly achieves overall performance that is close to the result based on exhaustive trial-and-error channel searches.

The NIST research differs from previous studies of machine learning in communications by taking into account multiple network layers, the physical equipment and the channel access rules between base stations and receivers.

The formula is a ‘Q-learning’ technique, meaning it maps environmental conditions (such as the types of networks and the numbers of transmitters and channels present) onto actions that maximise a value, known as Q, which estimates the reward each action is expected to return.

By interacting with the environment and trying different actions, the algorithm learns which channel provides the best outcome. Each transmitter learns to select the channel that yields the best data rate under specific environmental conditions.
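The learning loop described above can be illustrated with a minimal sketch. It is not the NIST formula: it assumes a single transmitter, a single environmental state, and a toy environment in which each channel has a hypothetical average data rate plus noise. The function name, the parameters (`alpha`, `epsilon`) and the rate values are all assumptions made for illustration.

```python
import random

def q_learning_channel_select(mean_rates, episodes=2000, alpha=0.1,
                              epsilon=0.1, seed=0):
    """Single-state Q-learning over channels for one transmitter.

    mean_rates is a hypothetical average data rate per channel; it stands
    in for the real network environment, which the article does not specify.
    """
    rng = random.Random(seed)
    q = [0.0] * len(mean_rates)  # one Q value per channel (action)
    for _ in range(episodes):
        # Explore a random channel with probability epsilon,
        # otherwise exploit the channel with the highest Q so far.
        if rng.random() < epsilon:
            ch = rng.randrange(len(q))
        else:
            ch = max(range(len(q)), key=q.__getitem__)
        # Reward: the noisy data rate observed on the chosen channel.
        reward = mean_rates[ch] + rng.gauss(0.0, 1.0)
        # Q-learning update; with a single state and no follow-on state,
        # the discounted future term drops out.
        q[ch] += alpha * (reward - q[ch])
    return q

q_values = q_learning_channel_select([5.0, 20.0, 10.0])
best_channel = max(range(len(q_values)), key=q_values.__getitem__)
print(best_channel)
```

In this toy setting the transmitter settles on the channel with the highest average rate after a modest number of trials, without being told the rates in advance.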

If both networks select channels appropriately, the efficiency of the combined overall network environment improves.

The method boosts data rates in two ways. First, if a transmitter selects a channel that is not occupied, the probability of a successful transmission rises, leading to a higher data rate. Second, if a transmitter selects a channel on which interference is minimised, the received signal is stronger relative to that interference, leading to a higher received data rate.
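One way to see how these two effects combine is the rough sketch below. The article does not give the rate model used in the study; this example assumes the standard Shannon capacity approximation, scaled by the probability of a successful transmission. The function name, the default bandwidth and all input values are hypothetical.

```python
import math

def expected_rate(p_success, signal, interference, noise=1.0,
                  bandwidth_hz=20e6):
    """Expected data rate in bit/s under an assumed Shannon-style model.

    p_success: probability the transmission goes through (channel is free).
    signal, interference, noise: linear power values in the same units.
    """
    sinr = signal / (interference + noise)
    return p_success * bandwidth_hz * math.log2(1.0 + sinr)

# Effect 1: a less occupied channel raises the success probability.
busy = expected_rate(p_success=0.5, signal=100.0, interference=0.0)
free = expected_rate(p_success=0.9, signal=100.0, interference=0.0)

# Effect 2: lower interference raises the signal-to-interference ratio.
noisy = expected_rate(p_success=0.9, signal=100.0, interference=10.0)
clean = expected_rate(p_success=0.9, signal=100.0, interference=0.0)

print(free > busy, clean > noisy)
```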

In the computer simulations, the optimum allocation method assigns channels to transmitters by searching all possible combinations to find the one that maximises the total network data rate. The NIST formula produces results that are close to the optimum but through a much simpler process.
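The exhaustive baseline can be sketched as follows. This is an illustration under stated assumptions, not the study's simulator: the rate model (transmitters on the same channel split a fixed rate equally) and the function names are hypothetical. It shows why the search grows quickly, since every transmitter can take every channel.

```python
from itertools import product

def total_rate(assignment, base_rate=10.0):
    """Toy model: transmitters sharing a channel split its rate equally."""
    counts = {}
    for ch in assignment:
        counts[ch] = counts.get(ch, 0) + 1
    return sum(base_rate / counts[ch] for ch in assignment)

def exhaustive_search(n_transmitters, n_channels):
    """Try every channel assignment and keep the one with the best total rate."""
    candidates = product(range(n_channels), repeat=n_transmitters)
    best = max(candidates, key=total_rate)
    n_combinations = n_channels ** n_transmitters
    return best, total_rate(best), n_combinations

best, rate, tried = exhaustive_search(n_transmitters=3, n_channels=3)
print(best, rate, tried)
```

Even in this small case the search evaluates 27 assignments, and the count multiplies by the number of channels for each added transmitter.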

The study found that an exhaustive effort to identify the best solution would require about 45,600 trials, whereas the formula could select a similar solution by trying only 10 channels, just 0.02% of the effort.
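The figures quoted above can be checked with simple arithmetic, using only the two trial counts reported in the article. The variable names are illustrative.

```python
exhaustive_trials = 45_600  # trials for the exhaustive search, as reported
learned_trials = 10         # channels tried by the formula, as reported

fraction = learned_trials / exhaustive_trials
speedup = exhaustive_trials / learned_trials

print(f"{fraction:.4%}")  # share of the exhaustive effort
print(speedup)            # ratio of exhaustive trials to learned trials
```

The ratio works out to 4560, which is consistent with the roughly 0.02% of the effort and the 'about 5000 times' figure quoted in the article.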

The study addressed indoor scenarios, such as a building with multiple Wi-Fi access points and cellphone operations in unlicensed bands. Researchers now plan to model the method in larger-scale outdoor scenarios and conduct physical experiments to demonstrate the effect.
